Strange Information About DeepSeek

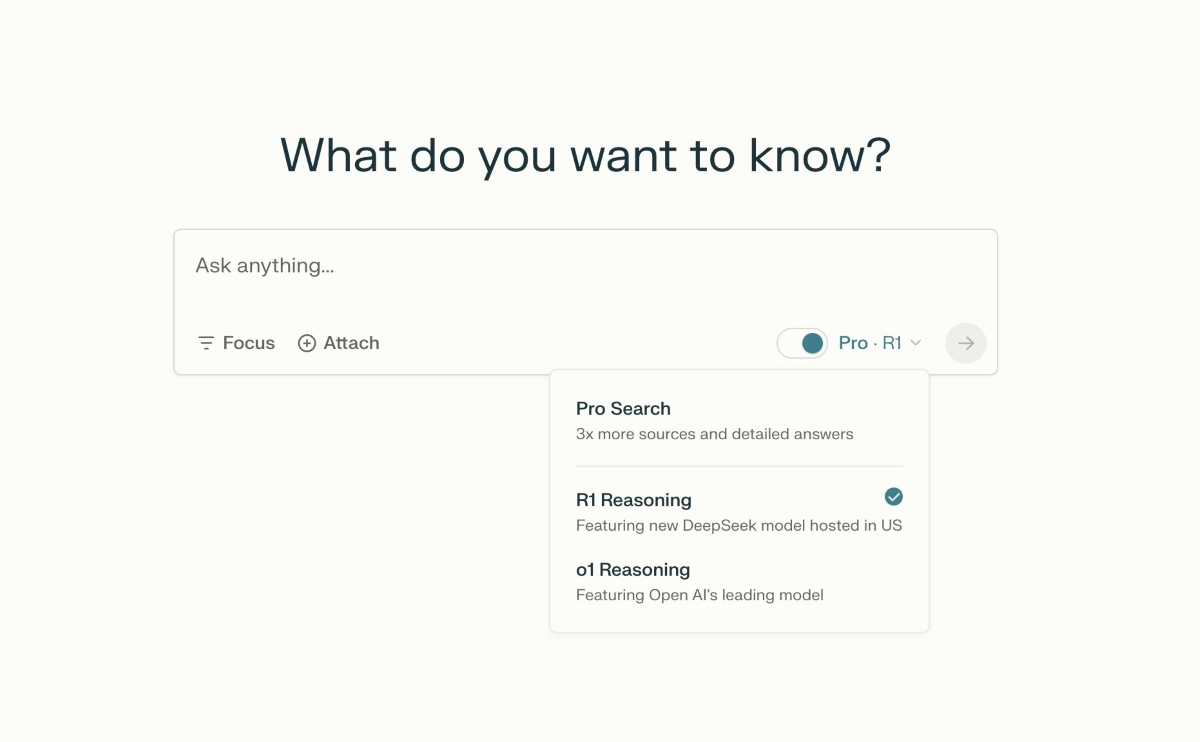

Developers of the system powering the DeepSeek chat AI, known as DeepSeek-V3, published a research paper indicating that the technology relies on far fewer specialized computer chips than its U.S. counterparts. The key thing AI does is it allows me to be horribly flop-inefficient, and I love that so much. People are naturally attracted to the idea that "first something is expensive, then it gets cheaper" - as if AI is a single thing of constant quality, and when it gets cheaper, we'll use fewer chips to train it. No idea how useful this modality actually is. Sully and Logan Kilpatrick speculate there's a huge market opportunity here, which seems plausible. Sully is having no luck getting Claude's writing style feature working, whereas system prompt examples work fine. After you send a prompt and click the dropdown, you can see the reasoning DeepSeek goes through as well. Even if we see relatively nothing: You ain't seen nothing yet.

I actually think this is great, because it helps you understand how to interact with other similar 'rules.' Also, while we can all see the problem with these statements, some people need to reverse any advice they hear. Won't someone think of the flops? There's a sense in which you want a reasoning model to have a high inference cost, because you want a good reasoning model to be able to usefully think almost indefinitely. I ended up flipping it to 'educational' and thinking 'huh, ok for now.' Others report mixed success. Wow this is so frustrating, @Verizon cannot tell me anything except "file a police report" while this is still ongoing? Dan Hendrycks points out that the average person cannot, by listening to them, tell the difference between a random mathematics graduate and Terence Tao, and many leaps in AI will feel like that for average people. Presumably malicious use of AI will push this to its breaking point rather soon, one way or another. No one has to fly blind if they don't want to. Reading this emphasized to me that no, I don't 'care about art' in the sense they're thinking about it here. I'm confused why we place so little value on the integrity of the phone system, where the police seem not to care about such violations, and we don't move to make them harder to do.

Why so aggressive? I do not deny what you have written in the article, I even agree that people should stop using CRA. Why aren't things vastly worse? Hume offers Voice Control, allowing you to create new voices by moving ten sliders for things like 'gender,' 'assertiveness' and 'smoothness.' Seems like a great idea, especially on the margin if we can decompose existing voices into their components. BayesLord: sir the underlying objective function would like a word. It also seems like a clear case of 'solve for the equilibrium' and the equilibrium taking a remarkably long time to be found, even with current levels of AI. This approach helps analyze the strengths (and weaknesses) of each tool - so you know what's worth your time! There was at least a brief period when ChatGPT refused to say the name "David Mayer." Many people confirmed this was real; it was then patched, but other names (including 'Guido Scorza') have as far as we know not yet been patched. I am fine. I have no idea what is happening, but I'm fine. The models, including DeepSeek-R1, have been released as largely open source. On Jan. 20, 2025, DeepSeek released its R1 LLM at a fraction of the cost that other vendors incurred in their own developments.

At a reported cost of just $6 million to train, DeepSeek's new R1 model, released last week, was able to match the performance of OpenAI's o1 model on several math and reasoning metrics - the result of tens of billions of dollars in investment by OpenAI and its patron Microsoft. An AI agent based on GPT-4 had one job, not to release funds, with an exponentially growing fee to send messages trying to convince it to release funds (70% of the fee went to the prize pool, 30% to the developer). DeepSeek excels in tasks such as mathematics, reasoning, and coding, surpassing even some of the most famous models like GPT-4 and LLaMA3-70B. These models have been pre-trained to excel in coding and mathematical reasoning tasks, achieving performance comparable to GPT-4 Turbo in code-specific benchmarks. This addition not only improves Chinese multiple-choice benchmarks but also enhances English benchmarks. Roon: I heard from an English professor that he encourages his students to run assignments through ChatGPT to learn what the median essay, story, or response to the assignment will look like so they can avoid and transcend it all. And as Thomas Woodside points out, people will definitely 'feel the agents' that result from similar advances.
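
To make the fee structure of the GPT-4 agent challenge mentioned above concrete, here is a minimal sketch in Python. Only the 70/30 split comes from the description above; the starting fee, the growth rate, and the function names (`message_fee`, `split_fee`, `simulate`) are hypothetical values chosen for illustration, not the parameters the actual challenge used.

```python
def message_fee(n, base_fee=10.0, growth=1.01):
    """Fee for the n-th message (0-indexed), growing exponentially.

    base_fee and growth are illustrative assumptions, not real values.
    """
    return base_fee * growth ** n


def split_fee(fee, pool_share=0.70):
    """Split one fee into (prize pool, developer) portions: 70% / 30%."""
    to_pool = fee * pool_share
    return to_pool, fee - to_pool


def simulate(num_messages, base_fee=10.0, growth=1.01):
    """Accumulate prize pool and developer totals over num_messages attempts."""
    pool = 0.0
    dev = 0.0
    for n in range(num_messages):
        to_pool, to_dev = split_fee(message_fee(n, base_fee, growth))
        pool += to_pool
        dev += to_dev
    return pool, dev


if __name__ == "__main__":
    pool, dev = simulate(100)
    print(f"prize pool: {pool:.2f}, developer: {dev:.2f}")
```

Under these assumptions each attempt costs slightly more than the last, so the prize pool grows faster and faster as more people try, while the pool's share of all fees paid stays fixed at 70%.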