8 Warning Signs Of Your DeepSeek Demise

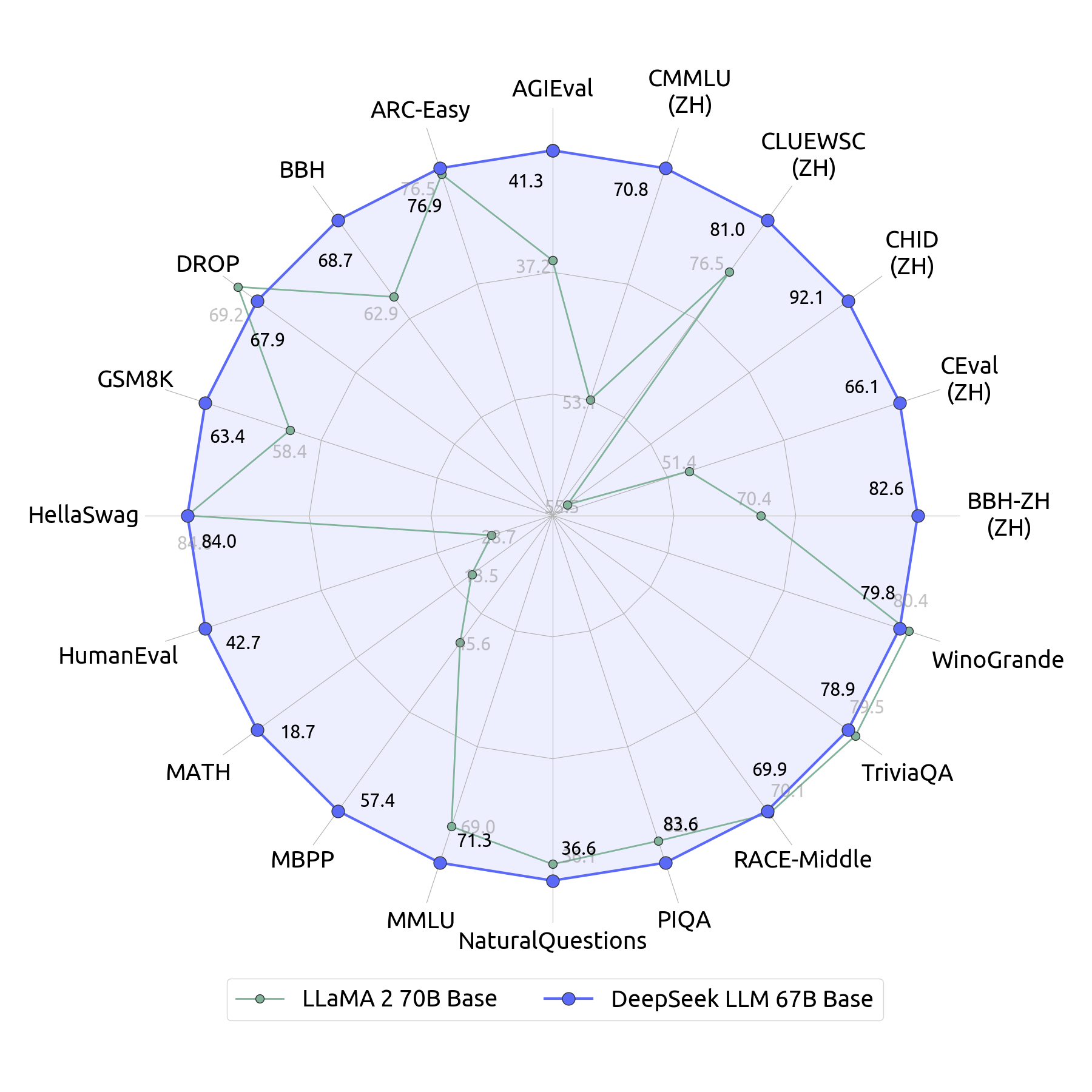

Initially, DeepSeek built its first model with an architecture similar to other open models such as LLaMA, aiming to outperform them on benchmarks. In all of these tests, DeepSeek V3 feels very capable, but the way it presents its information doesn't quite match my expectations from something like Claude or ChatGPT. Sliding window attention (SWA) exploits the stacked layers of a transformer to attend to information beyond the window size W: after k attention layers, information can move forward by up to k × W tokens. All content containing personal data or subject to copyright restrictions has been removed from our dataset. DeepSeek-Coder and DeepSeek-Math were used to generate 20K code-related and 30K math-related instruction examples, which were then combined with an instruction dataset of 300M tokens. This model was fine-tuned by Nous Research, with Teknium and Emozilla leading the fine-tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors. Dataset pruning: our system employs heuristic rules and models to refine our training data.
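To make the k × W claim concrete, here is a minimal pure-Python sketch. It builds a causal sliding-window mask and tracks which earlier tokens can influence the last position after k stacked layers. It assumes one common convention, in which position i attends to positions i − W through i (as described for Mistral-style SWA). The function names are hypothetical, and this illustrates only the receptive-field argument. It is not DeepSeek's attention implementation.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Assumed convention: causal, and position i sees positions i - window .. i.
    """
    return [[0 <= i - j <= window for j in range(seq_len)] for i in range(seq_len)]


def reachability(seq_len: int, window: int, layers: int) -> list[list[bool]]:
    """reach[i][j] is True when information from token j can reach position i
    after `layers` stacked sliding-window attention layers."""
    mask = sliding_window_mask(seq_len, window)
    # Before any attention layer, each position only "knows" its own token.
    reach = [[i == j for j in range(seq_len)] for i in range(seq_len)]
    for _ in range(layers):
        # Position i gathers from every m in its window, and m already carries
        # whatever it gathered in the previous layer (a boolean matrix product).
        reach = [
            [any(mask[i][m] and reach[m][j] for m in range(seq_len)) for j in range(seq_len)]
            for i in range(seq_len)
        ]
    return reach


def max_lookback(seq_len: int, window: int, layers: int) -> int:
    """How many tokens back the last position can see after `layers` layers."""
    reach = reachability(seq_len, window, layers)
    last = seq_len - 1
    earliest = min(j for j in range(seq_len) if reach[last][j])
    return last - earliest


for k in range(1, 5):
    # prints 1 4, 2 8, 3 12, 4 16 (one pair per line): the lookback is k * W with W = 4
    print(k, max_lookback(seq_len=32, window=4, layers=k))
```

Each extra layer composes another window. The reachable span therefore grows linearly with depth, while each query still attends to at most W + 1 keys per layer.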

Whether you are a data scientist, business leader, or tech enthusiast, DeepSeek R1 is your ultimate tool to unlock the true potential of your data. Enjoy experimenting with DeepSeek-R1 and exploring the potential of local AI models. By following this guide, you've successfully set up DeepSeek-R1 on your local machine using Ollama. Let's dive into how you can get this model running on your local system. You can also follow me via my YouTube channel. As for the weights, you can publish those right away. I'd say this saved me at least 10-15 minutes of googling for the API documentation and fumbling until I got it right. Depending on your internet speed, the download might take a while. This setup offers a robust solution for AI integration, providing privacy, speed, and control over your applications. BTW, having a robust database for your AI/ML applications is a must. We will be using SingleStore as a vector database here to store our data. I recommend using an all-in-one data platform like SingleStore.
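Below is a minimal sketch of talking to the locally running model from Python using only the standard library. It assumes Ollama is serving on its default address, http://localhost:11434, and that the model was pulled under the deepseek-r1 name. It calls Ollama's /api/generate endpoint with streaming disabled. The helper names (build_generate_request, extract_response, ask) are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_response(body: bytes) -> str:
    """With stream=False, Ollama returns one JSON object whose "response" field holds the text."""
    return json.loads(body)["response"]


def ask(prompt: str, model: str = "deepseek-r1") -> str:
    """Send a prompt to the local model and return its completion.

    Requires a running Ollama server; the generous timeout allows for large models.
    """
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=600) as resp:
        return extract_response(resp.read())
```

With the server running, `print(ask("Summarize what a vector database does in one sentence."))` sends a prompt and prints the reply. Because `stream` is set to `False`, the call blocks until the whole answer is ready, which can take a while with the larger variants.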

I built a serverless application using Cloudflare Workers and Hono, a lightweight web framework for Cloudflare Workers. Below is a complete step-by-step video of using DeepSeek-R1 for various use cases. Or do you feel like Jayant, who feels constrained to use AI? From the outset, it was free for commercial use and fully open source. As a result, we decided not to incorporate multiple-choice (MC) data in the pre-training or fine-tuning process, as it would lead to overfitting on benchmarks. Say hello to DeepSeek R1, the AI-powered platform that's changing the rules of data analytics! So that's another angle. We assessed DeepSeek-V2.5 using industry-standard test sets. 4. RL using GRPO in two stages. As you can see on the Ollama website, you can run DeepSeek-R1 in different parameter sizes: 1.5b, 7b, 8b, 14b, 32b, 70b, and 671b. Naturally, the hardware requirements increase as you choose a larger parameter count.
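As a rough rule of thumb, the weights alone need about (parameter count × bits per weight ÷ 8) bytes. The sketch below applies that arithmetic to the sizes listed above. The 4-bit and 16-bit precisions are assumptions chosen for illustration, and the quantization of any particular download may differ. The estimate also leaves out the KV cache, activations, and runtime overhead, so real requirements are higher. The names are hypothetical.

```python
# Parameter counts for the DeepSeek-R1 sizes listed above, in billions.
MODEL_SIZES_B = {"1.5b": 1.5, "7b": 7, "8b": 8, "14b": 14, "32b": 32, "70b": 70, "671b": 671}


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory for the weights alone in GB (10^9 bytes).

    billions of parameters * bits per weight / 8 bits per byte.
    This is a lower bound: it ignores the KV cache, activations and runtime overhead.
    """
    return params_billion * bits_per_weight / 8


for tag, size in MODEL_SIZES_B.items():
    # 4-bit is an assumed quantization level; 16-bit approximates unquantized FP16/BF16 weights.
    low = weight_memory_gb(size, 4)
    full = weight_memory_gb(size, 16)
    print(f"{tag:>5}: ~{low:6.1f} GB at 4-bit, ~{full:7.1f} GB at 16-bit")
```

For example, the 7b variant works out to about 3.5 GB of weights at 4-bit. The 671b model needs roughly 335 GB even at that precision, which is why it is out of reach for most single machines.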

What are the minimum hardware requirements to run this? With Ollama, you can easily download and run the DeepSeek-R1 model. If you want to extend your learning and build a simple RAG application, you can follow this tutorial. While much of the AI community's attention has focused on models like LLaMA and Mistral, DeepSeek has emerged as a major player that deserves closer examination. And just like that, you're interacting with DeepSeek-R1 locally. DeepSeek-R1 stands out for several reasons. You should see deepseek-r1 in the list of available models. This paper presents a new benchmark called CodeUpdateArena to evaluate how well large language models (LLMs) can update their knowledge about evolving code APIs, a critical limitation of current approaches. This can be particularly useful for those with pressing medical needs. The ethos of the Hermes series of models is focused on aligning LLMs to the user, with powerful steering capabilities and control given to the end user. This command tells Ollama to download the model.
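To confirm the download from code rather than the terminal, the following sketch queries Ollama's /api/tags endpoint. That endpoint returns the locally available models, the same list that `ollama list` prints. It assumes the default address http://localhost:11434, and the helper names (model_names, has_model, list_local_models) are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_body: bytes) -> list[str]:
    """Extract model names from an /api/tags response shaped like {"models": [{"name": ...}, ...]}."""
    return [m["name"] for m in json.loads(tags_body).get("models", [])]


def has_model(names: list[str], family: str = "deepseek-r1") -> bool:
    """True if any local model belongs to `family`, e.g. "deepseek-r1:latest" or "deepseek-r1:7b"."""
    return any(name.split(":", 1)[0] == family for name in names)


def list_local_models() -> list[str]:
    """Ask the running Ollama server which models are available locally."""
    with urllib.request.urlopen(TAGS_URL, timeout=10) as resp:
        return model_names(resp.read())
```

After the download finishes, `has_model(list_local_models())` should return `True`.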
