Compute is all that matters: Philosophically, DeepSeek thinks about the maturity of Chinese AI models in terms of how efficiently they’re able to use compute. It’s also possible to use the model to automatically task the robots to gather data, which is most of what Google did here. China’s DeepSeek team have built and released DeepSeek-R1, a model that uses reinforcement learning to train an AI system to be able to use test-time compute. And yet, as the AI technologies get better, they become increasingly relevant for everything, including uses that their creators both don’t envisage and may also find upsetting. "We don’t have short-term fundraising plans." If you want to track whoever has 5,000 GPUs on your cloud so you have a sense of who is capable of training frontier models, that’s relatively easy to do. "Smaller GPUs present many promising hardware characteristics: they have much lower cost for fabrication and packaging, higher bandwidth to compute ratios, lower power density, and lighter cooling requirements". "That is less than 10% of the cost of Meta’s Llama." That’s a tiny fraction of the hundreds of millions to billions of dollars that US firms like Google, Microsoft, xAI, and OpenAI have spent training their models.

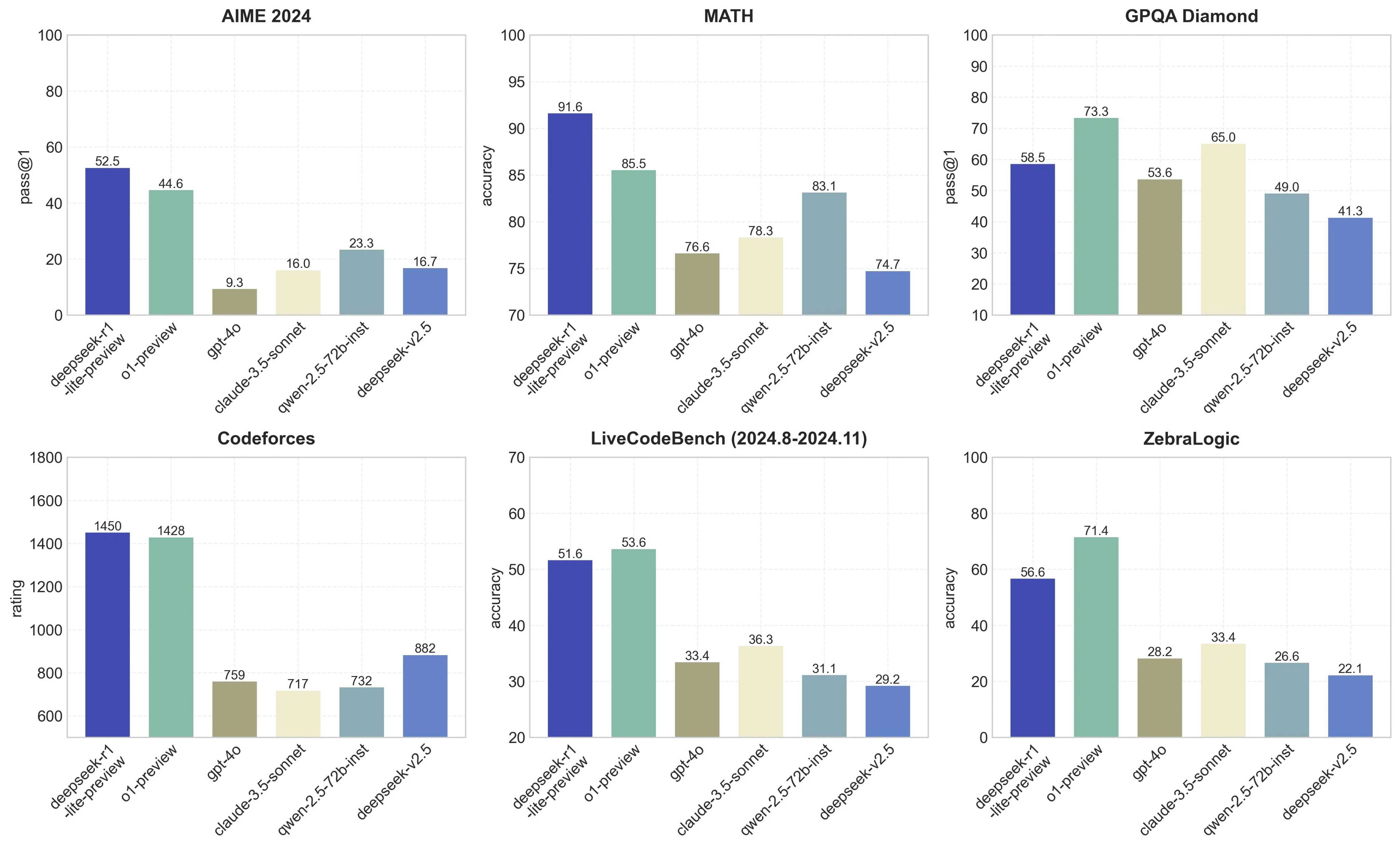

Its performance is comparable to leading closed-source models like GPT-4o and Claude-Sonnet-3.5, narrowing the gap between open-source and closed-source models in this domain. Additionally, there’s about a twofold gap in data efficiency, meaning we need twice the training data and computing power to reach comparable results. "This means we need twice the computing power to achieve the same results." Why this matters - decentralized training could change a lot about AI policy and power centralization in AI: Today, influence over AI development is determined by people who can access enough capital to acquire enough computers to train frontier models. They’re also better from an energy point of view, generating less heat, making them easier to power and integrate densely in a datacenter. We believe the pipeline will benefit the industry by creating better models. Researchers with University College London, IDEAS NCBR, the University of Oxford, New York University, and Anthropic have built BALROG, a benchmark for visual language models that tests their intelligence by seeing how well they do on a suite of text-adventure games. Get the benchmark here: BALROG (balrog-ai, GitHub).

"BALROG is difficult to solve through simple memorization - all of the environments used in the benchmark are procedurally generated, and encountering the same instance of an environment twice is unlikely," they write. Why this matters - text games are hard to learn and may require rich conceptual representations: Go and play a text adventure game and notice your own experience - you’re both learning the gameworld and ruleset while also building a rich cognitive map of the environment implied by the text and the visual representations. DeepSeek essentially took their existing very good model, built a smart reinforcement learning on LLM engineering stack, then did some RL, then they used this dataset to turn their model and other good models into LLM reasoning models. Read more: BALROG: Benchmarking Agentic LLM and VLM Reasoning On Games (arXiv). DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrated remarkable performance on reasoning. DeepSeek also recently debuted DeepSeek-R1-Lite-Preview, a language model that wraps in reinforcement learning to get better performance.

Instruction-following evaluation for large language models. Pretty good: They train two types of model, a 7B and a 67B, then they compare performance with the 7B and 70B LLaMa2 models from Facebook. They’d made no attempt to disguise its artifice - it had no defined features besides two white dots where human eyes would go. Then he opened his eyes to look at his opponent. Inside he closed his eyes as he walked towards the gameboard. The resulting dataset is more diverse than datasets generated in more fixed environments. Finally, we are exploring a dynamic redundancy strategy for experts, where each GPU hosts more experts (e.g., 16 experts), but only 9 will be activated during each inference step. We are also exploring the dynamic redundancy strategy for decoding. Auxiliary-loss-free load balancing strategy for mixture-of-experts. LLM: Support DeepSeek-V3 model with FP8 and BF16 modes for tensor parallelism and pipeline parallelism.
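
To make the dynamic redundancy idea above more concrete, here is a minimal Python sketch of a GPU that hosts 16 experts but activates only 9 per inference step. The figures 16 and 9 come from the text; everything else is an assumption for illustration. In particular, the text does not say how the 9 active experts are chosen, so the rule used here (prefer the experts with the most recently routed tokens) and the names `select_active_experts` and `token_counts` are hypothetical.

```python
from collections import Counter

HOSTED_PER_GPU = 16   # experts resident on one GPU (figure from the text)
ACTIVE_PER_STEP = 9   # experts activated per inference step (figure from the text)


def select_active_experts(hosted, token_counts, k=ACTIVE_PER_STEP):
    """Pick k of the hosted experts, preferring those with the most routed tokens.

    Hypothetical selection rule, not taken from the source: experts are ranked
    by routed-token count (descending), with ties broken by expert id so the
    result is deterministic.
    """
    if k > len(hosted):
        raise ValueError("cannot activate more experts than are hosted")
    ranked = sorted(hosted, key=lambda e: (-token_counts.get(e, 0), e))
    return sorted(ranked[:k])


# Made-up routing statistics for one GPU; unlisted experts received no tokens.
hosted = list(range(HOSTED_PER_GPU))
token_counts = Counter(
    {0: 50, 3: 40, 5: 35, 7: 30, 8: 25, 10: 20, 12: 15, 14: 10, 15: 5, 1: 1}
)
active = select_active_experts(hosted, token_counts)
print(active)
```

The point of the sketch is only the shape of the trade-off: hosting more experts than are activated gives the scheduler room to choose which ones run at each step, at the cost of extra memory for the idle experts.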