TheBloke/deepseek-coder-33B-instruct-GGUF · Hugging Face

DeepSeek Coder uses the HuggingFace Tokenizer to implement the byte-level BPE algorithm, with specially designed pre-tokenizers to ensure optimal performance. However, we observed that it does not improve the model's knowledge performance on other evaluations that do not use the multiple-choice format in the 7B setting. Please use our setting to run these models. Use of the DeepSeek-V2 Base/Chat models is subject to the Model License. We evaluate our model on LiveCodeBench (0901-0401), a benchmark designed for live coding challenges. Based on our experimental observations, we have found that improving benchmark performance on multiple-choice (MC) questions, such as MMLU, CMMLU, and C-Eval, is a relatively simple task. When using vLLM as a server, pass the --quantization awq parameter. To facilitate efficient execution of our model, we provide a dedicated vLLM solution that optimizes performance for running our model effectively. I'll consider adding 32g as well if there's interest, and once I've done perplexity and evaluation comparisons, but at this time 32g models are still not fully tested with AutoAWQ and vLLM. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now.
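To make the byte-level BPE idea concrete, here is a toy sketch in plain Python. It is not the DeepSeek Coder tokenizer and does not use the HuggingFace library: it skips pre-tokenization entirely, and the function names (`train_byte_bpe`, `merge_pair`, `encode`, `decode`) are hypothetical, chosen only for illustration. It shows the core loop: start from raw UTF-8 bytes, repeatedly merge the most frequent adjacent pair into a new token id, and replay those merges at encoding time.

```python
from collections import Counter


def merge_pair(seq, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `seq` with `new_id`."""
    out, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out


def train_byte_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules over the UTF-8 bytes of `text`."""
    seq = list(text.encode("utf-8"))  # base vocabulary: byte values 0..255
    merges = []                       # ordered list of ((left, right), new_id)
    next_id = 256
    for _ in range(num_merges):
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < 2:                 # nothing repeats, so merging gains nothing
            break
        seq = merge_pair(seq, pair, next_id)
        merges.append((pair, next_id))
        next_id += 1
    return merges


def encode(text, merges):
    """Apply the learned merges, in training order, to new text."""
    seq = list(text.encode("utf-8"))
    for pair, new_id in merges:
        seq = merge_pair(seq, pair, new_id)
    return seq


def decode(ids, merges):
    """Expand token ids back to bytes and decode them as UTF-8."""
    table = {i: bytes([i]) for i in range(256)}
    for (left, right), new_id in merges:
        table[new_id] = table[left] + table[right]
    return b"".join(table[i] for i in ids).decode("utf-8")


if __name__ == "__main__":
    merges = train_byte_bpe("def foo(): return foo", num_merges=10)
    ids = encode("def foo(): 深度", merges)
    print(ids)
    print(decode(ids, merges))
```

Because the base vocabulary is the 256 byte values, any input string can be encoded, including text in scripts the training sample never contained. Production tokenizers add a pre-tokenization step before merging, which this sketch leaves out.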


In March 2022, High-Flyer advised certain clients who were sensitive to volatility to take their money back, as it predicted the market was more likely to fall further. OpenAI CEO Sam Altman has said that it cost more than $100m to train its chatbot GPT-4, while analysts have estimated that the model used as many as 25,000 more advanced H100 GPUs. It contained 10,000 Nvidia A100 GPUs. DeepSeek (Chinese AI co) making it look easy today with an open weights release of a frontier-grade LLM trained on a joke of a budget (2048 GPUs for 2 months, $6M). Massive Training Data: Trained from scratch on 2T tokens, including 87% code and 13% linguistic data in both English and Chinese. This addition not only improves Chinese multiple-choice benchmarks but also enhances English benchmarks. 1. Pretrain on a dataset of 8.1T tokens, where Chinese tokens are 12% more than English ones.


DeepSeek (Chinese: 深度求索; pinyin: Shēndù Qiúsuǒ) is a Chinese artificial intelligence (abbreviated A.I. DeepSeek has made its generative artificial intelligence chatbot open source, meaning its code is freely available for use, modification, and viewing. DeepSeek makes its generative artificial intelligence algorithms, models, and training details open source, allowing its code to be freely available for use, modification, viewing, and designing documents for building purposes. This includes permission to access and use the source code, as well as design documents, for building purposes. DeepSeek-R1 achieves performance comparable to OpenAI-o1 across math, code, and reasoning tasks. Our pipeline elegantly incorporates the verification and reflection patterns of R1 into DeepSeek-V3 and notably improves its reasoning performance. At an economical cost of only 2.664M H800 GPU hours, we complete the pre-training of DeepSeek-V3 on 14.8T tokens, producing the currently strongest open-source base model. DeepSeek-V3 uses significantly fewer resources compared to its peers; for example, whereas the world's leading A.I. For example, healthcare providers can use DeepSeek to analyze medical images for early diagnosis of diseases, while security companies can enhance surveillance systems with real-time object detection. Lucas Hansen, co-founder of the nonprofit CivAI, said that while it was difficult to know whether DeepSeek circumvented US export controls, the startup's claimed training budget referred to V3, which is roughly equivalent to OpenAI's GPT-4, not R1 itself.
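As a rough sanity check on the figures quoted on this page (2.664M H800 GPU hours for pre-training, and "2048 GPUs for 2 months, $6M"), the arithmetic can be sketched as below. Two things here are assumptions rather than facts from the text: that all 2048 GPUs run continuously for the whole job, and the rental price of $2 per GPU hour, which is a hypothetical rate used only to convert hours into dollars.

```python
# Figures taken from the text.
PRETRAIN_GPU_HOURS = 2.664e6   # H800 GPU hours for pre-training
NUM_GPUS = 2048                # cluster size quoted alongside "2 months, $6M"

# Assumption: an illustrative rental price, not a figure stated in the text.
ASSUMED_USD_PER_GPU_HOUR = 2.0


def wall_clock_days(gpu_hours, num_gpus):
    """Days needed if every GPU runs around the clock for the whole job."""
    return gpu_hours / num_gpus / 24


def rental_cost_usd(gpu_hours, usd_per_gpu_hour):
    """Cost of the job at a flat hourly rental rate per GPU."""
    return gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    days = wall_clock_days(PRETRAIN_GPU_HOURS, NUM_GPUS)
    cost = rental_cost_usd(PRETRAIN_GPU_HOURS, ASSUMED_USD_PER_GPU_HOUR)
    print(f"wall-clock time: {days:.1f} days")
    print(f"rental cost: ${cost / 1e6:.2f}M")
```

Under these assumptions the pre-training run works out to roughly 54 days and about $5.3M, which is in line with the "2 months, $6M" summary. The result scales linearly with the assumed hourly rate, so a different rate changes the dollar figure but not the duration.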

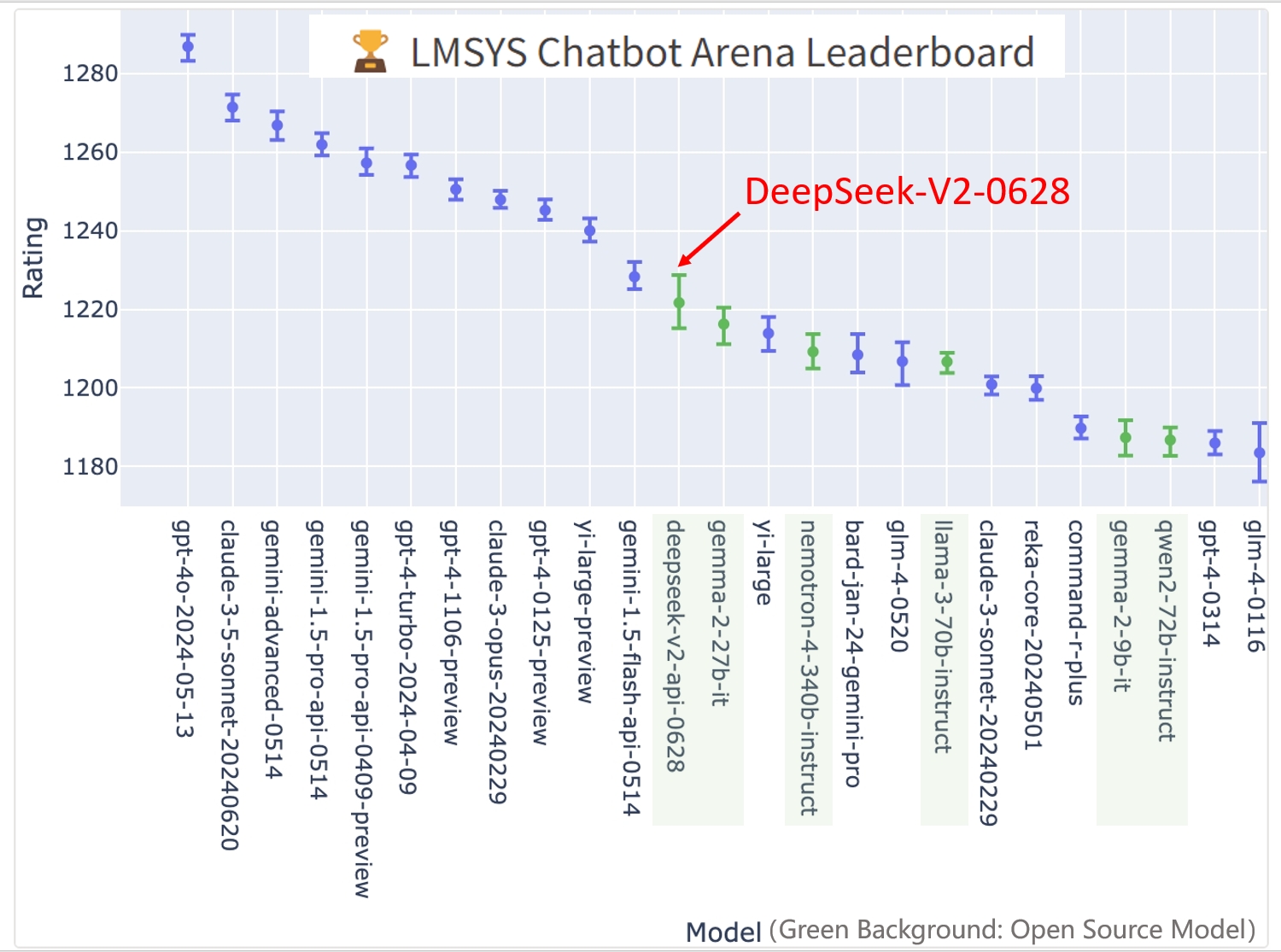

The 7B model used Multi-Head Attention, while the 67B model used Grouped-Query Attention. What's new: DeepSeek announced DeepSeek-R1, a model family that processes prompts by breaking them down into steps. Unlike o1-preview, which hides its reasoning, DeepSeek-R1-lite-preview's reasoning steps are visible at inference. According to DeepSeek, R1-lite-preview, using an unspecified number of reasoning tokens, outperforms OpenAI o1-preview, OpenAI GPT-4o, Anthropic Claude 3.5 Sonnet, Alibaba Qwen 2.5 72B, and DeepSeek-V2.5 on three out of six reasoning-intensive benchmarks. Models are pre-trained using 1.8T tokens and a 4K window size in this step. Each model is pre-trained on a project-level code corpus using a window size of 16K and an extra fill-in-the-blank task, to support project-level code completion and infilling. 3. Repetition: The model may exhibit repetition in its generated responses. After releasing DeepSeek-V2 in May 2024, which offered strong performance for a low price, DeepSeek became known as the catalyst for China's A.I. K), a lower sequence length may have to be used.
